Today, we are surrounded by online content. From social media posts and news articles to product reviews and video comments, information is constantly flowing. But how do we make sure this information is safe, appropriate, and trustworthy? This is where a tool called an AI Content Filter comes into play. This guide explains the technology in simple, easy-to-understand language.


What Exactly Is an AI Content Filter?

Let’s start with the basics. An AI Content Filter is a software tool powered by Artificial Intelligence (AI). Its main job is to automatically review, analyze, and manage digital content. Think of it as a super-smart security guard for the internet. Unlike older filters that only blocked certain words, this tool is designed to take context and meaning into account.

For example, a traditional filter would block any comment containing “idiot,” even a harmless line from a book review such as “the hero is no idiot.” AI-powered systems are trained to tell the difference between harmful intent and harmless usage, though they still make mistakes. They learn from labeled examples, improve as they are retrained on new data, and can handle not only text but also images, videos, and audio.
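To make the contrast concrete, here is a minimal Python sketch of the older, list-based approach. The blocklist and the example sentences are made up for illustration. Because the filter only checks whether a listed word appears, it blocks the harmless book review and the real insult alike.

```python
import re

# Hypothetical blocklist; real word-list filters use much larger, curated lists.
BLOCKLIST = {"idiot", "stupid"}


def keyword_filter(text: str) -> bool:
    """Return True (block) if any word in the text appears on the blocklist."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)


review = "The hero is no idiot: she outsmarts everyone by chapter three."
insult = "You are an idiot."

print(keyword_filter(review))                   # True (false positive: harmless use)
print(keyword_filter(insult))                   # True
print(keyword_filter("Great book, loved it."))  # False
```

The filter has no way to see that “no idiot” is praise; that missing context is the gap AI-based filters try to close.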

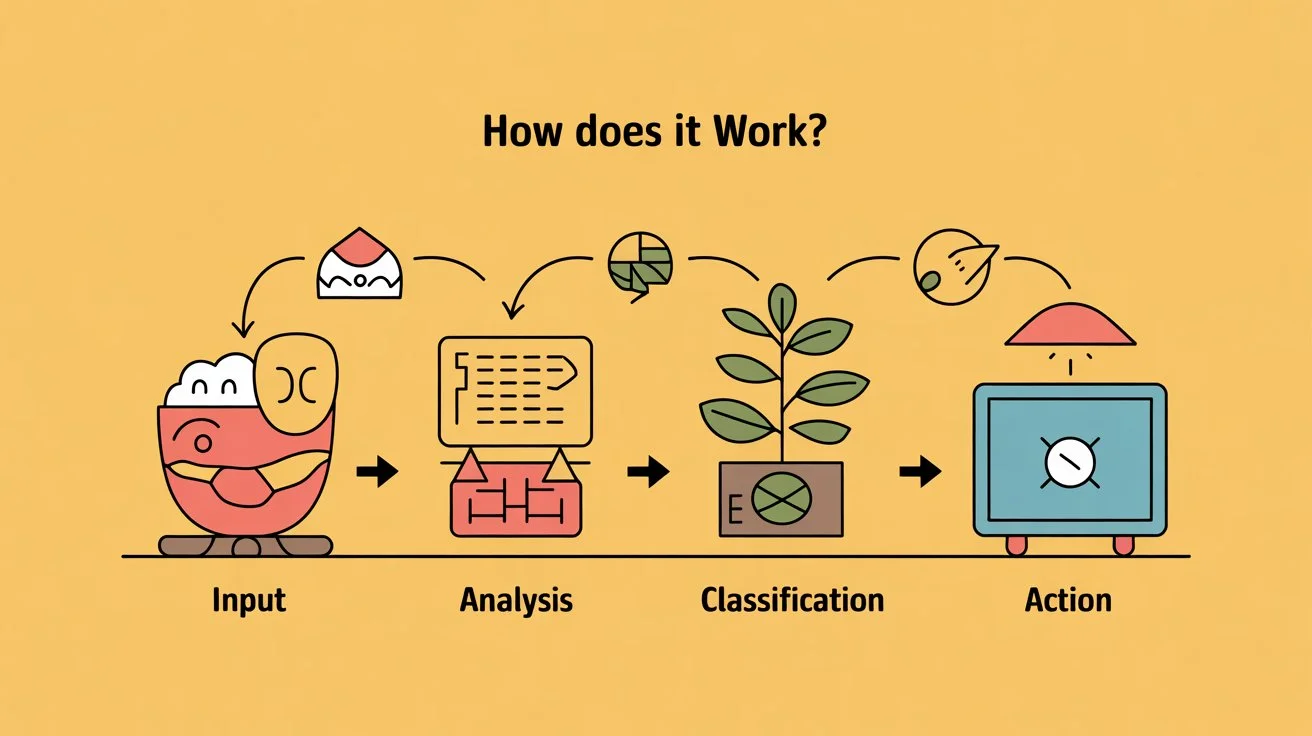

How Does an AI Content Filter Work?

You might think this technology is complicated, but the process is easier to understand than it seems. At its core, it works by learning from massive amounts of data. Here’s a simple step-by-step breakdown:

- Input: The system receives content—such as a social media comment, uploaded video, or blog post.

- Analysis: It breaks down the words, tone, and even visuals. Then, it compares the content against patterns it has learned.

- Classification: The AI decides if the content is safe, offensive, spam, or unsuitable for certain audiences.

- Action: Based on the results, the system approves the content, blocks it, or sends it for human review.
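The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular platform works: a tiny naive Bayes classifier (a classic text-classification technique, swapped in here for the much larger models real services use) learns word patterns from six made-up labeled sentences, and the hypothetical `moderate` function turns its score into an action using two assumed thresholds, 0.3 and 0.9.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Start of analysis: lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFilter:
    """Toy multinomial naive Bayes classifier with add-one (Laplace) smoothing."""

    def __init__(self, examples: list[tuple[str, str]]) -> None:
        # Learn how often each word appears under each label.
        self.word_counts = {"harmful": Counter(), "safe": Counter()}
        self.doc_counts = Counter()
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts["harmful"]) | set(self.word_counts["safe"])

    def harmful_probability(self, text: str) -> float:
        """Analysis + classification: estimated probability that the text is harmful."""
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # label prior
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            log_scores[label] = score
        # P(harmful) = 1 / (1 + exp(log P(safe) - log P(harmful)))
        return 1.0 / (1.0 + math.exp(log_scores["safe"] - log_scores["harmful"]))


def moderate(text: str, model: NaiveBayesFilter,
             approve_below: float = 0.3, block_at: float = 0.9) -> str:
    """Action step: approve, block, or send to a human moderator."""
    p = model.harmful_probability(text)
    if p >= block_at:
        return "block"
    if p < approve_below:
        return "approve"
    return "human_review"


# Hypothetical training data; real systems learn from far larger labeled datasets.
training = [
    ("you are an idiot", "harmful"),
    ("i will hurt you", "harmful"),
    ("you are worthless", "harmful"),
    ("great book, loved it", "safe"),
    ("thanks for the help", "safe"),
    ("see you tomorrow", "safe"),
]
model = NaiveBayesFilter(training)

print(moderate("Thanks for the book", model))   # approve
print(moderate("You are an idiot", model))      # block
print(moderate("The hero is no idiot", model))  # human_review
```

The book-review sentence lands in human review rather than being approved: with so little training data, the model weighs “idiot” toward harmful and “the” toward safe about equally, so it cannot decide. Borderline scores like this are exactly what the human-review step is for.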

This whole process usually takes seconds or less per item. That speed is what makes it practical for platforms that handle millions of posts every day.

Why Do We Need AI Content Filters?

The internet is open to everyone, which is both its greatest strength and its biggest risk. Here’s why this technology is so important:

- Protecting users: Children and vulnerable groups can be shielded from harmful, violent, or explicit content.

- Fighting spam and fraud: Fake reviews, scams, and phishing attempts can be detected and stopped automatically.

- Maintaining brand reputation: Businesses can keep their websites and social media pages positive, welcoming spaces.

- Reducing hate speech and bullying: Harmful comments are flagged quickly, making communities safer.

- Saving resources: Instead of humans reviewing every single post, AI handles the bulk, allowing human moderators to focus on complex issues.

The Amazing Benefits of Using an AI Content Filter

Adopting such a tool provides several major advantages:

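- Scalability: Human teams can review only a fraction of posted content, but AI can process millions of items around the clock.

- Consistency: The same rules are applied to every case, reducing the inconsistency that comes from different moderators making different calls. (Bias learned from training data is a separate risk.)

- Context understanding: Advanced systems can often recognize sarcasm, slang, and humor, which reduces mistakes compared with simple word lists.

- Proactive safety: Harmful material can be flagged as soon as it is posted, often before many users see it.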

In short, this technology works like an invisible shield that keeps digital spaces clean, fair, and welcoming.

The Challenges and Things to Consider

Like all tools, AI content filtering has its limitations. Some of the main challenges include:

- Over-blocking: Safe content might sometimes get blocked by mistake (false positives).

- Under-blocking: Harmful posts may slip through (false negatives).

- Bias risks: If trained on biased data, the AI could make unfair decisions.

- Need for humans: AI isn’t perfect. The best systems combine automation with human judgment for tricky cases.
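Over-blocking, under-blocking, and bias can all be measured. One common approach is to compare the filter’s decisions with human labels on an audit sample and compute the false positive rate (safe posts wrongly blocked) and the false negative rate (harmful posts missed), both overall and for each group of users. The minimal Python sketch below assumes a made-up sample of twelve decisions and two hypothetical user groups, “A” and “B” (for example, posts written in two different dialects).

```python
from collections import defaultdict

# Hypothetical audit sample: (filter_blocked, actually_harmful, user_group)
decisions = [
    (True, True, "A"), (False, True, "A"), (True, False, "A"),
    (False, False, "A"), (False, False, "A"), (False, False, "A"),
    (True, True, "B"), (True, True, "B"), (True, False, "B"),
    (True, False, "B"), (False, False, "B"), (False, False, "B"),
]


def error_rates(rows):
    """Return (false_positive_rate, false_negative_rate) for audit rows."""
    fp = sum(1 for blocked, harmful, _ in rows if blocked and not harmful)
    tn = sum(1 for blocked, harmful, _ in rows if not blocked and not harmful)
    fn = sum(1 for blocked, harmful, _ in rows if not blocked and harmful)
    tp = sum(1 for blocked, harmful, _ in rows if blocked and harmful)
    fpr = fp / (fp + tn) if fp + tn else 0.0  # over-blocking
    fnr = fn / (fn + tp) if fn + tp else 0.0  # under-blocking
    return fpr, fnr


def rates_by_group(rows):
    """Compute error rates separately for each user group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[2]].append(row)
    return {group: error_rates(group_rows) for group, group_rows in groups.items()}


print(error_rates(decisions))     # (0.375, 0.25)
print(rates_by_group(decisions))  # {'A': (0.25, 0.5), 'B': (0.5, 0.0)}
```

In this invented sample, safe posts from group B are wrongly blocked twice as often as those from group A (50% vs. 25%). A gap like that does not prove bias on its own, but it is the kind of signal that should prompt a closer look at the training data.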

These challenges show why ongoing improvements and ethical training are so important.

Real-World Uses of AI Content Filtering

This technology is already at work in many online spaces we use daily. For example:

- Social Media: Platforms like Facebook, YouTube, and TikTok rely on AI to moderate billions of posts, keeping communities safe.

- E-Commerce: Online stores filter out fake reviews and scam attempts to build customer trust.

- Gaming Platforms: AI filters screen in-game chat to reduce toxic behavior, harassment, and bullying.

- Education Tools: Online learning platforms use filters to make sure students only see appropriate study materials.

These examples show that the AI Content Filter is not just a technical tool: it is helping shape safer digital environments across many industries.

Conclusion: A Powerful Partner for a Better Internet

The AI Content Filter is not about silencing voices—it’s about protecting users and building trust online. It works as a first line of defense for websites, apps, and communities, making sure harmful material doesn’t ruin the digital experience.

While it does have challenges, such as occasional mistakes or bias, combining AI with human oversight makes it much more reliable. As artificial intelligence continues to improve, we can expect even smarter filters that provide more accurate, fair, and safe results.

In the end, this tool plays a critical role in creating a digital world where everyone—kids, families, businesses, and creators—can interact without fear of abuse or harmful content.

Frequently Asked Questions (FAQs)

Q1: Can an AI content filter understand multiple languages?

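Yes! Many filters are trained on multilingual data. Accuracy varies by language and is usually highest for widely used languages with plenty of training examples, so it is worth testing a filter on the languages your users actually write in.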

Q2: Does this mean human moderators will lose their jobs?

Not necessarily. AI handles repetitive cases, while humans focus on sensitive, complex situations. Together, they usually work better than either one alone.

Q3: How can I use one for my website?

Many companies, such as Google, Amazon, and Microsoft, offer content moderation APIs that you can integrate into your website or app. Check each provider’s documentation for supported content types, languages, and pricing.

Q4: Does filtering affect user privacy?

Ethically designed filters only analyze content for safety patterns, not personal details. The goal is content moderation, not surveillance.

Q5: What’s the difference between a regular filter and an AI content filter?

A regular filter simply blocks words from a list. For example, it may block the word “idiot” in any sentence. An AI-based system looks at the surrounding context, so it can allow harmless mentions while blocking actual insults.